parent

89553cfafa

commit

286534188a

4 changed files with 429 additions and 394 deletions

File diff suppressed because one or more lines are too long

File diff suppressed because one or more lines are too long

819

generate_map.py

|

|

@ -2,6 +2,7 @@

|

|||

#

|

||||

# https://git.kabelsalat.ch/s3lph/erfamap

|

||||

|

||||

import abc

|

||||

import os

|

||||

import urllib.request

|

||||

import urllib.parse

|

||||

|

|

@ -54,74 +55,40 @@ class OutputPaths:

|

|||

return os.path.relpath(path, start=self.path)

|

||||

|

||||

|

||||

ERFA_URL = 'https://doku.ccc.de/index.php?title=Spezial:Semantische_Suche&x=-5B-5BKategorie%3AErfa-2DKreise-5D-5D-20-5B-5BChaostreff-2DActive%3A%3Awahr-5D-5D%2F-3FChaostreff-2DCity%2F-3FChaostreff-2DPhysical-2DAddress%2F-3FChaostreff-2DPhysical-2DHousenumber%2F-3FChaostreff-2DPhysical-2DPostcode%2F-3FChaostreff-2DPhysical-2DCity%2F-3FChaostreff-2DCountry%2F-3FPublic-2DWeb%2F-3FChaostreff-2DLongname%2F-3FChaostreff-2DNickname%2F-3FChaostreff-2DRealname&format=json&limit=200&link=all&headers=show&searchlabel=JSON&class=sortable+wikitable+smwtable&sort=&order=asc&offset=0&mainlabel=&prettyprint=true&unescape=true'

|

||||

class CoordinateTransform:

|

||||

|

||||

CHAOSTREFF_URL = 'https://doku.ccc.de/index.php?title=Spezial:Semantische_Suche&x=-5B-5BKategorie%3AChaostreffs-5D-5D-20-5B-5BChaostreff-2DActive%3A%3Awahr-5D-5D%2F-3FChaostreff-2DCity%2F-3FChaostreff-2DPhysical-2DAddress%2F-3FChaostreff-2DPhysical-2DHousenumber%2F-3FChaostreff-2DPhysical-2DPostcode%2F-3FChaostreff-2DPhysical-2DCity%2F-3FChaostreff-2DCountry%2F-3FPublic-2DWeb%2F-3FChaostreff-2DLongname%2F-3FChaostreff-2DNickname%2F-3FChaostreff-2DRealname&format=json&limit=200&link=all&headers=show&searchlabel=JSON&class=sortable+wikitable+smwtable&sort=&order=asc&offset=0&mainlabel=&prettyprint=true&unescape=true'

|

||||

def __init__(self, projection: str):

|

||||

self._proj = pyproj.Transformer.from_crs('epsg:4326', projection)

|

||||

self.setup()

|

||||

|

||||

def setup(self, scalex=1.0, scaley=1.0, bbox=((-180, -90), (180, 90))):

|

||||

self._scalex = scalex

|

||||

self._scaley = scaley

|

||||

west = min(bbox[0][0], bbox[1][0])

|

||||

north = max(bbox[0][1], bbox[1][1])

|

||||

east = max(bbox[0][0], bbox[1][0])

|

||||

south = min(bbox[0][1], bbox[1][1])

|

||||

self._ox, self._oy = self._proj.transform(west, north)

|

||||

return self(east, south)

|

||||

|

||||

def __call__(self, lon, lat):

|

||||

xt, yt = self._proj.transform(lon, lat)

|

||||

return (

|

||||

(xt - self._ox) * self._scalex,

|

||||

(self._oy - yt) * self._scaley

|

||||

)

|

||||

|

||||

|

||||

def sparql_query(query):

|

||||

headers = {

|

||||

'Content-Type': 'application/x-www-form-urlencoded',

|

||||

'User-Agent': USER_AGENT,

|

||||

'Accept': 'application/sparql-results+json',

|

||||

}

|

||||

body = urllib.parse.urlencode({'query': query}).encode()

|

||||

req = urllib.request.Request('https://query.wikidata.org/sparql', headers=headers, data=body)

|

||||

with urllib.request.urlopen(req) as resp:

|

||||

resultset = json.load(resp)

|

||||

results = {}

|

||||

for r in resultset.get('results', {}).get('bindings'):

|

||||

basename = None

|

||||

url = None

|

||||

for k, v in r.items():

|

||||

if k == 'item':

|

||||

basename = os.path.basename(v['value'])

|

||||

elif k == 'map':

|

||||

url = v['value']

|

||||

results[basename] = url

|

||||

return results

|

||||

class Drawable(abc.ABC):

|

||||

|

||||

def render(self, svg, texts):

|

||||

pass

|

||||

|

||||

|

||||

def fetch_geoshapes(target, shape_urls):

|

||||

os.makedirs(target, exist_ok=True)

|

||||

candidates = {}

|

||||

keep = {}

|

||||

for item, url in tqdm.tqdm(shape_urls.items()):

|

||||

try:

|

||||

with urllib.request.urlopen(url) as resp:

|

||||

shape = json.load(resp)

|

||||

if not shape.get('license', 'proprietary').startswith('CC0-'):

|

||||

# Only include public domain data

|

||||

continue

|

||||

candidates.setdefault(item, []).append(shape)

|

||||

except urllib.error.HTTPError as e:

|

||||

print(e)

|

||||

for item, ican in candidates.items():

|

||||

# Prefer zoom level 4

|

||||

keep[item] = min(ican, key=lambda x: abs(4-x.get('zoom', 1000)))

|

||||

for item, shape in keep.items():

|

||||

with open(os.path.join(target, item + '.json'), 'w') as f:

|

||||

json.dump(shape, f)

|

||||

class Country(Drawable):

|

||||

|

||||

|

||||

def fetch_wikidata_states(target):

|

||||

shape_urls = sparql_query('''

|

||||

|

||||

PREFIX wd: <http://www.wikidata.org/entity/>

|

||||

PREFIX wdt: <http://www.wikidata.org/prop/direct/>

|

||||

|

||||

SELECT DISTINCT ?item ?map WHERE {

|

||||

# ?item is instance of federal state of germany and has geoshape ?map

|

||||

?item wdt:P31 wd:Q1221156;

|

||||

wdt:P3896 ?map

|

||||

}

|

||||

''')

|

||||

print('Retrieving state border shapes')

|

||||

fetch_geoshapes(target, shape_urls)

|

||||

|

||||

|

||||

def fetch_wikidata_countries(target):

|

||||

shape_urls = sparql_query('''

|

||||

SVG_CLASS = 'country'

|

||||

SPARQL_QUERY = '''

|

||||

|

||||

PREFIX wd: <http://www.wikidata.org/entity/>

|

||||

PREFIX wdt: <http://www.wikidata.org/prop/direct/>

|

||||

|

|

@ -142,134 +109,313 @@ SELECT DISTINCT ?item ?map WHERE {

|

|||

FILTER (?stateclass = wd:Q6256 || ?stateclass = wd:Q3624078).

|

||||

FILTER (?euroclass = wd:Q46 || ?euroclass = wd:Q8932).

|

||||

}

|

||||

''')

|

||||

print('Retrieving country border shapes')

|

||||

fetch_geoshapes(target, shape_urls)

|

||||

'''

|

||||

|

||||

def __init__(self, ns, name, polygons):

|

||||

self.name = name

|

||||

self.polygons = [[ns.projection(lon, lat) for lon, lat in p] for p in polygons]

|

||||

|

||||

def filter_boundingbox(source, target, bbox):

|

||||

files = os.listdir(source)

|

||||

os.makedirs(target, exist_ok=True)

|

||||

print('Filtering countries outside the bounding box')

|

||||

for f in tqdm.tqdm(files):

|

||||

if not f.endswith('.json') or 'Q183.json' in f:

|

||||

continue

|

||||

path = os.path.join(source, f)

|

||||

with open(path, 'r') as sf:

|

||||

shapedata = sf.read()

|

||||

shape = json.loads(shapedata)

|

||||

keep = False

|

||||

geo = shape['data']['features'][0]['geometry']

|

||||

if geo['type'] == 'Polygon':

|

||||

geo['coordinates'] = [geo['coordinates']]

|

||||

for poly in geo['coordinates']:

|

||||

for point in poly[0]:

|

||||

if point[0] >= bbox[0][0] and point[1] >= bbox[0][1] \

|

||||

and point[0] <= bbox[1][0] and point[1] <= bbox[1][1]:

|

||||

keep = True

|

||||

def __len__(self):

|

||||

return sum([len(p) for p in self.polygons])

|

||||

|

||||

def render(self, svg, texts):

|

||||

for polygon in self.polygons:

|

||||

points = ' '.join([f'{x},{y}' for x, y in polygon])

|

||||

poly = etree.Element('polygon', points=points)

|

||||

poly.set('class', self.__class__.SVG_CLASS)

|

||||

poly.set('data-country', self.name)

|

||||

svg.append(poly)

|

||||

|

||||

@classmethod

|

||||

def sparql_query(cls):

|

||||

headers = {

|

||||

'Content-Type': 'application/x-www-form-urlencoded',

|

||||

'User-Agent': USER_AGENT,

|

||||

'Accept': 'application/sparql-results+json',

|

||||

}

|

||||

body = urllib.parse.urlencode({'query': cls.SPARQL_QUERY}).encode()

|

||||

req = urllib.request.Request('https://query.wikidata.org/sparql', headers=headers, data=body)

|

||||

with urllib.request.urlopen(req) as resp:

|

||||

resultset = json.load(resp)

|

||||

results = {}

|

||||

for r in resultset.get('results', {}).get('bindings'):

|

||||

basename = None

|

||||

url = None

|

||||

for k, v in r.items():

|

||||

if k == 'item':

|

||||

basename = os.path.basename(v['value'])

|

||||

elif k == 'map':

|

||||

url = v['value']

|

||||

results[basename] = url

|

||||

return results

|

||||

|

||||

@classmethod

|

||||

def fetch(cls, target):

|

||||

os.makedirs(target, exist_ok=True)

|

||||

shape_urls = cls.sparql_query()

|

||||

candidates = {}

|

||||

keep = {}

|

||||

for item, url in tqdm.tqdm(shape_urls.items()):

|

||||

try:

|

||||

with urllib.request.urlopen(url) as resp:

|

||||

shape = json.load(resp)

|

||||

if not shape.get('license', 'proprietary').startswith('CC0-'):

|

||||

# Only include public domain data

|

||||

continue

|

||||

candidates.setdefault(item, []).append(shape)

|

||||

except urllib.error.HTTPError as e:

|

||||

print(e)

|

||||

for item, ican in candidates.items():

|

||||

# Prefer zoom level 4

|

||||

keep[item] = min(ican, key=lambda x: abs(4-x.get('zoom', 1000)))

|

||||

for item, shape in keep.items():

|

||||

with open(os.path.join(target, item + '.json'), 'w') as f:

|

||||

json.dump(shape, f)

|

||||

|

||||

@classmethod

|

||||

def from_cache(cls, ns, source):

|

||||

countries = []

|

||||

files = os.listdir(source)

|

||||

for f in files:

|

||||

if not f.endswith('.json'):

|

||||

continue

|

||||

path = os.path.join(source, f)

|

||||

with open(path, 'r') as sf:

|

||||

shapedata = sf.read()

|

||||

shape = json.loads(shapedata)

|

||||

name = shape['description']['en']

|

||||

geo = shape['data']['features'][0]['geometry']

|

||||

if geo['type'] == 'Polygon':

|

||||

geo['coordinates'] = [geo['coordinates']]

|

||||

polygons = []

|

||||

for poly in geo['coordinates']:

|

||||

polygons.append(poly[0])

|

||||

countries.append(cls(ns, name, polygons))

|

||||

return countries

|

||||

|

||||

@classmethod

|

||||

def filter_boundingbox(cls, ns, source, target, bbox):

|

||||

files = os.listdir(source)

|

||||

os.makedirs(target, exist_ok=True)

|

||||

for f in tqdm.tqdm(files):

|

||||

if not f.endswith('.json') or 'Q183.json' in f:

|

||||

continue

|

||||

path = os.path.join(source, f)

|

||||

with open(path, 'r') as sf:

|

||||

shapedata = sf.read()

|

||||

shape = json.loads(shapedata)

|

||||

keep = False

|

||||

geo = shape['data']['features'][0]['geometry']

|

||||

if geo['type'] == 'Polygon':

|

||||

geo['coordinates'] = [geo['coordinates']]

|

||||

for poly in geo['coordinates']:

|

||||

for point in poly[0]:

|

||||

if point[0] >= bbox[0][0] and point[1] >= bbox[0][1] \

|

||||

and point[0] <= bbox[1][0] and point[1] <= bbox[1][1]:

|

||||

keep = True

|

||||

break

|

||||

if keep:

|

||||

break

|

||||

if keep:

|

||||

break

|

||||

if keep:

|

||||

with open(os.path.join(target, f), 'w') as sf:

|

||||

sf.write(shapedata)

|

||||

with open(os.path.join(target, f), 'w') as sf:

|

||||

sf.write(shapedata)

|

||||

|

||||

|

||||

def address_lookup(name, erfa):

|

||||

locator = Nominatim(user_agent=USER_AGENT)

|

||||

number = erfa['Chaostreff-Physical-Housenumber']

|

||||

street = erfa['Chaostreff-Physical-Address']

|

||||

zipcode = erfa['Chaostreff-Physical-Postcode']

|

||||

acity = erfa['Chaostreff-Physical-City']

|

||||

city = erfa['Chaostreff-City'][0]

|

||||

country = erfa['Chaostreff-Country'][0]

|

||||

|

||||

# Try the most accurate address first, try increasingly inaccurate addresses on failure.

|

||||

formats = [

|

||||

# Muttenz, Schweiz

|

||||

f'{city}, {country}'

|

||||

]

|

||||

if zipcode and acity:

|

||||

# 4132 Muttenz, Schweiz

|

||||

formats.insert(0, f'{zipcode[0]} {acity[0]}, {country}')

|

||||

if zipcode and acity and number and street:

|

||||

# Birsfelderstrasse 6, 4132 Muttenz, Schweiz

|

||||

formats.insert(0, f'{street[0]} {number[0]}, {zipcode[0]} {acity[0]}, {country}')

|

||||

class FederalState(Country):

|

||||

|

||||

for fmt in formats:

|

||||

response = locator.geocode(fmt)

|

||||

if response is not None:

|

||||

return response.longitude, response.latitude

|

||||

SVG_CLASS = 'state'

|

||||

SPARQL_QUERY = '''

|

||||

|

||||

print(f'No location found for {name}, tried the following address formats:')

|

||||

for fmt in formats:

|

||||

print(f' {fmt}')

|

||||

return None

|

||||

PREFIX wd: <http://www.wikidata.org/entity/>

|

||||

PREFIX wdt: <http://www.wikidata.org/prop/direct/>

|

||||

|

||||

SELECT DISTINCT ?item ?map WHERE {

|

||||

# ?item is instance of federal state of germany and has geoshape ?map

|

||||

?item wdt:P31 wd:Q1221156;

|

||||

wdt:P3896 ?map

|

||||

}

|

||||

'''

|

||||

|

||||

|

||||

def fetch_erfas(target, url):

|

||||

userpw = os.getenv('DOKU_CCC_DE_BASICAUTH')

|

||||

if userpw is None:

|

||||

print('Please set environment variable DOKU_CCC_DE_BASICAUTH=username:password')

|

||||

exit(1)

|

||||

auth = base64.b64encode(userpw.encode()).decode()

|

||||

erfas = {}

|

||||

req = urllib.request.Request(url, headers={'Authorization': f'Basic {auth}'})

|

||||

with urllib.request.urlopen(req) as resp:

|

||||

erfadata = json.loads(resp.read().decode())

|

||||

print('Looking up addresses')

|

||||

for name, erfa in tqdm.tqdm(erfadata['results'].items()):

|

||||

location = address_lookup(name, erfa['printouts'])

|

||||

if location is None:

|

||||

print(f'WARNING: No location for {name}')

|

||||

city = erfa['printouts']['Chaostreff-City'][0]

|

||||

erfas[city] = {'location': location}

|

||||

if len(erfa['printouts']['Public-Web']) > 0:

|

||||

erfas[city]['web'] = erfa['printouts']['Public-Web'][0]

|

||||

if len(erfa['printouts']['Chaostreff-Longname']) > 0:

|

||||

erfas[city]['name'] = erfa['printouts']['Chaostreff-Longname'][0]

|

||||

elif len(erfa['printouts']['Chaostreff-Nickname']) > 0:

|

||||

erfas[city]['name'] = erfa['printouts']['Chaostreff-Nickname'][0]

|

||||

elif len(erfa['printouts']['Chaostreff-Realname']) > 0:

|

||||

erfas[city]['name'] = erfa['printouts']['Chaostreff-Realname'][0]

|

||||

class Erfa(Drawable):

|

||||

|

||||

SMW_URL = 'https://doku.ccc.de/index.php?title=Spezial:Semantische_Suche&x=-5B-5BKategorie%3A{category}-5D-5D-20-5B-5BChaostreff-2DActive%3A%3Awahr-5D-5D%2F-3FChaostreff-2DCity%2F-3FChaostreff-2DPhysical-2DAddress%2F-3FChaostreff-2DPhysical-2DHousenumber%2F-3FChaostreff-2DPhysical-2DPostcode%2F-3FChaostreff-2DPhysical-2DCity%2F-3FChaostreff-2DCountry%2F-3FPublic-2DWeb%2F-3FChaostreff-2DLongname%2F-3FChaostreff-2DNickname%2F-3FChaostreff-2DRealname&format=json&limit={limit}&link=all&headers=show&searchlabel=JSON&class=sortable+wikitable+smwtable&sort=&order=asc&offset={offset}&mainlabel=&prettyprint=true&unescape=true'

|

||||

|

||||

SMW_REQUEST_LIMIT = 50

|

||||

|

||||

SMW_CATEGORY = 'Erfa-2DKreise'

|

||||

|

||||

SVG_CLASS = 'erfa'

|

||||

SVG_DATA = 'data-erfa'

|

||||

SVG_LABEL = True

|

||||

SVG_LABELCLASS = 'erfalabel'

|

||||

|

||||

def __init__(self, ns, name, city, lon, lat, display_name=None, web=None, radius=15):

|

||||

self.name = name

|

||||

self.city = city

|

||||

self.lon = lon

|

||||

self.lat = lat

|

||||

self.x, self.y = ns.projection(lon, lat)

|

||||

self.display_name = display_name if display_name is not None else name

|

||||

self.web = web

|

||||

self.radius = radius

|

||||

|

||||

def __eq__(self, o):

|

||||

if not isinstance(o, Erfa):

|

||||

return False

|

||||

return self.name == o.name

|

||||

|

||||

def render(self, svg, texts):

|

||||

cls = self.__class__

|

||||

if self.web is not None:

|

||||

group = etree.Element('a', href=self.web, target='_blank')

|

||||

else:

|

||||

erfas[city]['name'] = name

|

||||

group = etree.Element('g')

|

||||

group.set(cls.SVG_DATA, self.city)

|

||||

circle = etree.Element('circle', cx=str(self.x), cy=str(self.y), r=str(self.radius))

|

||||

circle.set('class', cls.SVG_CLASS)

|

||||

circle.set(cls.SVG_DATA, self.city)

|

||||

title = etree.Element('title')

|

||||

title.text = self.display_name

|

||||

circle.append(title)

|

||||

group.append(circle)

|

||||

if self.city in texts:

|

||||

box = texts[self.city]

|

||||

text = etree.Element('text', x=str(box.left), y=str(box.top + box.meta['baseline']))

|

||||

text.set('class', cls.SVG_LABELCLASS)

|

||||

text.set(cls.SVG_DATA, self.city)

|

||||

text.text = box.meta['text']

|

||||

group.append(text)

|

||||

svg.append(group)

|

||||

|

||||

@classmethod

|

||||

def address_lookup(cls, attr):

|

||||

locator = Nominatim(user_agent=USER_AGENT)

|

||||

number = attr['Chaostreff-Physical-Housenumber']

|

||||

street = attr['Chaostreff-Physical-Address']

|

||||

zipcode = attr['Chaostreff-Physical-Postcode']

|

||||

acity = attr['Chaostreff-Physical-City']

|

||||

city = attr['Chaostreff-City'][0]

|

||||

country = attr['Chaostreff-Country'][0]

|

||||

|

||||

with open(target, 'w') as f:

|

||||

json.dump(erfas, f)

|

||||

|

||||

|

||||

def compute_bbox(ns):

|

||||

if ns.bbox is not None:

|

||||

return [

|

||||

(min(ns.bbox[0], ns.bbox[2]), min(ns.bbox[1], ns.bbox[3])),

|

||||

(max(ns.bbox[0], ns.bbox[2]), max(ns.bbox[1], ns.bbox[3]))

|

||||

# Try the most accurate address first, try increasingly inaccurate addresses on failure.

|

||||

formats = [

|

||||

# Muttenz, Schweiz

|

||||

f'{city}, {country}'

|

||||

]

|

||||

if zipcode and acity:

|

||||

# 4132 Muttenz, Schweiz

|

||||

formats.insert(0, f'{zipcode[0]} {acity[0]}, {country}')

|

||||

if zipcode and acity and number and street:

|

||||

# Birsfelderstrasse 6, 4132 Muttenz, Schweiz

|

||||

formats.insert(0, f'{street[0]} {number[0]}, {zipcode[0]} {acity[0]}, {country}')

|

||||

|

||||

print('Computing map bounding box')

|

||||

bounds = []

|

||||

for path in tqdm.tqdm([ns.cache_directory.erfa_info, ns.cache_directory.chaostreff_info]):

|

||||

with open(path, 'r') as f:

|

||||

erfadata = json.load(f)

|

||||

for data in erfadata.values():

|

||||

if 'location' not in data:

|

||||

continue

|

||||

lon, lat = data['location']

|

||||

if len(bounds) == 0:

|

||||

bounds.append(lon)

|

||||

bounds.append(lat)

|

||||

bounds.append(lon)

|

||||

bounds.append(lat)

|

||||

else:

|

||||

bounds[0] = min(bounds[0], lon)

|

||||

bounds[1] = min(bounds[1], lat)

|

||||

bounds[2] = max(bounds[2], lon)

|

||||

bounds[3] = max(bounds[3], lat)

|

||||

return [

|

||||

(bounds[0] - ns.bbox_margin, bounds[1] - ns.bbox_margin),

|

||||

(bounds[2] + ns.bbox_margin, bounds[3] + ns.bbox_margin)

|

||||

]

|

||||

for fmt in formats:

|

||||

response = locator.geocode(fmt)

|

||||

if response is not None:

|

||||

return response.longitude, response.latitude

|

||||

|

||||

print(f'No location found for {city}, tried the following address formats:')

|

||||

for fmt in formats:

|

||||

print(f' {fmt}')

|

||||

return None

|

||||

|

||||

@classmethod

|

||||

def from_api(cls, ns, name, attr, radius):

|

||||

city = attr['Chaostreff-City'][0]

|

||||

location = cls.address_lookup(attr)

|

||||

if location is None:

|

||||

raise ValueError(f'No location for {name}')

|

||||

lon, lat = location

|

||||

# There are up to 4 different names: 3 SMW attrs and the page name

|

||||

if len(attr['Chaostreff-Longname']) > 0:

|

||||

display_name = attr['Chaostreff-Longname'][0]

|

||||

elif len(attr['Chaostreff-Nickname']) > 0:

|

||||

display_name = attr['Chaostreff-Nickname'][0]

|

||||

elif len(attr['Chaostreff-Realname']) > 0:

|

||||

display_name = attr['Chaostreff-Realname'][0]

|

||||

else:

|

||||

display_name = name

|

||||

if len(attr['Public-Web']) > 0:

|

||||

web = attr['Public-Web'][0]

|

||||

else:

|

||||

web = None

|

||||

return cls(ns, name, city, lon, lat, display_name, web, radius)

|

||||

|

||||

def to_dict(self):

|

||||

return {

|

||||

'name': self.name,

|

||||

'city': self.city,

|

||||

'web': self.web,

|

||||

'display_name': self.display_name,

|

||||

'location': [self.lon, self.lat]

|

||||

}

|

||||

|

||||

@classmethod

|

||||

def from_dict(cls, ns, dct, radius):

|

||||

name = dct['name']

|

||||

city = dct['city']

|

||||

lon, lat = dct['location']

|

||||

display_name = dct.get('display_name', name)

|

||||

web = dct.get('web')

|

||||

radius = radius

|

||||

return cls(ns, name, city, lon, lat, display_name, web, radius)

|

||||

|

||||

@classmethod

|

||||

def fetch(cls, ns, target, radius):

|

||||

userpw = os.getenv('DOKU_CCC_DE_BASICAUTH')

|

||||

if userpw is None:

|

||||

print('Please set environment variable DOKU_CCC_DE_BASICAUTH=username:password')

|

||||

exit(1)

|

||||

auth = base64.b64encode(userpw.encode()).decode()

|

||||

erfas = []

|

||||

|

||||

offset = 0

|

||||

while True:

|

||||

url = cls.SMW_URL.format(

|

||||

category=cls.SMW_CATEGORY,

|

||||

limit=cls.SMW_REQUEST_LIMIT,

|

||||

offset=offset

|

||||

)

|

||||

req = urllib.request.Request(url, headers={'Authorization': f'Basic {auth}'})

|

||||

with urllib.request.urlopen(req) as resp:

|

||||

body = resp.read().decode()

|

||||

if len(body) == 0:

|

||||

# All pages read, new response is empty

|

||||

break

|

||||

erfadata = json.loads(body)

|

||||

if erfadata['rows'] == 0:

|

||||

break

|

||||

offset += erfadata['rows']

|

||||

for name, attr in erfadata['results'].items():

|

||||

try:

|

||||

erfa = cls.from_api(ns, name, attr['printouts'], radius)

|

||||

erfas.append(erfa)

|

||||

except Exception as e:

|

||||

print(e)

|

||||

continue

|

||||

|

||||

# Save to cache

|

||||

with open(target, 'w') as f:

|

||||

json.dump([e.to_dict() for e in erfas], f)

|

||||

return erfas

|

||||

|

||||

@classmethod

|

||||

def from_cache(cls, ns, source, radius):

|

||||

with open(source, 'r') as f:

|

||||

data = json.load(f)

|

||||

return [cls.from_dict(ns, d, radius) for d in data]

|

||||

|

||||

|

||||

class Chaostreff(Erfa):

|

||||

|

||||

SMW_CATEGORY = 'Chaostreffs'

|

||||

|

||||

SVG_CLASS = 'chaostreff'

|

||||

SVG_DATA = 'data-chaostreff'

|

||||

SVG_DOTSIZE_ATTR = 'dotsize_treff'

|

||||

SVG_LABEL = False

|

||||

|

||||

|

||||

class BoundingBox:

|

||||

|

|

@ -395,7 +541,7 @@ class BoundingBox:

|

|||

# Basically the weights correspond to geometrical distances,

|

||||

# except for an actual collision, which gets a huge extra weight.

|

||||

for o in other:

|

||||

if o.meta['city'] == self.meta['city']:

|

||||

if o.meta['erfa'] == self.meta['erfa']:

|

||||

continue

|

||||

if o in self:

|

||||

if o.finished:

|

||||

|

|

@ -406,17 +552,19 @@ class BoundingBox:

|

|||

self._optimal = False

|

||||

else:

|

||||

maxs.append(max(pdist*2 - swm.chebyshev_distance(o), 0))

|

||||

for city, location in erfas.items():

|

||||

if city == self.meta['city']:

|

||||

for erfa in erfas:

|

||||

if erfa == self.meta['erfa']:

|

||||

continue

|

||||

location = (erfa.x, erfa.y)

|

||||

if location in swe:

|

||||

w += 1000

|

||||

self._optimal = False

|

||||

else:

|

||||

maxs.append(max(pdist*2 - swe.chebyshev_distance(o), 0))

|

||||

for city, location in chaostreffs.items():

|

||||

if city == self.meta['city']:

|

||||

maxs.append(max(pdist*2 - swe.chebyshev_distance(location), 0))

|

||||

for treff in chaostreffs:

|

||||

if treff == self.meta['erfa']:

|

||||

continue

|

||||

location = (treff.x, treff.y)

|

||||

if location in swc:

|

||||

w += 1000

|

||||

self._optimal = False

|

||||

|

|

@ -439,7 +587,35 @@ class BoundingBox:

|

|||

return f'(({int(self.left)}, {int(self.top)}, {int(self.right)}, {int(self.bottom)}), weight={self.weight})'

|

||||

|

||||

|

||||

def optimize_text_layout(ns, erfas, chaostreffs, size, svg):

|

||||

def compute_bbox(ns):

|

||||

if ns.bbox is not None:

|

||||

return [

|

||||

(min(ns.bbox[0], ns.bbox[2]), min(ns.bbox[1], ns.bbox[3])),

|

||||

(max(ns.bbox[0], ns.bbox[2]), max(ns.bbox[1], ns.bbox[3]))

|

||||

]

|

||||

|

||||

print('Computing map bounding box')

|

||||

bounds = []

|

||||

for path in tqdm.tqdm([ns.cache_directory.erfa_info, ns.cache_directory.chaostreff_info]):

|

||||

erfas = Erfa.from_cache(ns, path, radius=0)

|

||||

for e in erfas:

|

||||

if len(bounds) == 0:

|

||||

bounds.append(e.lon)

|

||||

bounds.append(e.lat)

|

||||

bounds.append(e.lon)

|

||||

bounds.append(e.lat)

|

||||

else:

|

||||

bounds[0] = min(bounds[0], e.lon)

|

||||

bounds[1] = min(bounds[1], e.lat)

|

||||

bounds[2] = max(bounds[2], e.lon)

|

||||

bounds[3] = max(bounds[3], e.lat)

|

||||

return [

|

||||

(bounds[0] - ns.bbox_margin, bounds[1] - ns.bbox_margin),

|

||||

(bounds[2] + ns.bbox_margin, bounds[3] + ns.bbox_margin)

|

||||

]

|

||||

|

||||

|

||||

def optimize_text_layout(ns, erfas, chaostreffs, width, svg):

|

||||

|

||||

# Load the font and measure its various different heights

|

||||

font = ImageFont.truetype(ns.font, ns.font_size)

|

||||

|

|

@ -450,15 +626,15 @@ def optimize_text_layout(ns, erfas, chaostreffs, size, svg):

|

|||

|

||||

# Generate a discrete set of text placement candidates around each erfa dot

|

||||

candidates = {}

|

||||

for city, location in erfas.items():

|

||||

text = city

|

||||

erfax, erfay = location

|

||||

for erfa in erfas:

|

||||

city = erfa.city

|

||||

text = erfa.city

|

||||

for rfrom, to in ns.rename:

|

||||

if rfrom == city:

|

||||

text = to

|

||||

break

|

||||

|

||||

meta = {'city': city, 'text': text, 'baseline': capheight}

|

||||

meta = {'erfa': erfa, 'text': text, 'baseline': capheight}

|

||||

textbox = pil.textbbox((0, 0), text, font=font, anchor='ls') # left, baseline at 0,0

|

||||

mw, mh = textbox[2] - textbox[0], textbox[3] - textbox[1]

|

||||

candidates[city] = []

|

||||

|

|

@ -473,20 +649,20 @@ def optimize_text_layout(ns, erfas, chaostreffs, size, svg):

|

|||

if i == 0:

|

||||

bw -= 0.003

|

||||

bwl, bwr = bw, bw

|

||||

if erfax > 0.8 * size[0]:

|

||||

if erfa.x > 0.8 * width:

|

||||

bwr = bw + 0.001

|

||||

else:

|

||||

bwl = bw + 0.001

|

||||

candidates[city].append(BoundingBox(erfax - dist - mw, erfay + voffset + ns.dotsize_erfa*i*2, width=mw, height=mh, meta=meta, base_weight=bwl))

|

||||

candidates[city].append(BoundingBox(erfax + dist, erfay + voffset + ns.dotsize_erfa*i*2, width=mw, height=mh, meta=meta, base_weight=bwr))

|

||||

candidates[city].append(BoundingBox(erfa.x - dist - mw, erfa.y + voffset + ns.dotsize_erfa*i*2, width=mw, height=mh, meta=meta, base_weight=bwl))

|

||||

candidates[city].append(BoundingBox(erfa.x + dist, erfa.y + voffset + ns.dotsize_erfa*i*2, width=mw, height=mh, meta=meta, base_weight=bwr))

|

||||

# Generate 3 candidates each above and beneath the dot, aligned left, centered and right

|

||||

candidates[city].extend([

|

||||

BoundingBox(erfax - mw/2, erfay - dist - mh, width=mw, height=mh, meta=meta, base_weight=bw + 0.001),

|

||||

BoundingBox(erfax - mw/2, erfay + dist, width=mw, height=mh, meta=meta, base_weight=bw + 0.001),

|

||||

BoundingBox(erfax - ns.dotsize_erfa, erfay - dist - mh, width=mw, height=mh, meta=meta, base_weight=bw + 0.002),

|

||||

BoundingBox(erfax - ns.dotsize_erfa, erfay + dist, width=mw, height=mh, meta=meta, base_weight=bw + 0.003),

|

||||

BoundingBox(erfax + ns.dotsize_erfa - mw, erfay - dist - mh, width=mw, height=mh, meta=meta, base_weight=bw + 0.002),

|

||||

BoundingBox(erfax + ns.dotsize_erfa - mw, erfay + dist, width=mw, height=mh, meta=meta, base_weight=bw + 0.003),

|

||||

BoundingBox(erfa.x - mw/2, erfa.y - dist - mh, width=mw, height=mh, meta=meta, base_weight=bw + 0.001),

|

||||

BoundingBox(erfa.x - mw/2, erfa.y + dist, width=mw, height=mh, meta=meta, base_weight=bw + 0.001),

|

||||

BoundingBox(erfa.x - ns.dotsize_erfa, erfa.y - dist - mh, width=mw, height=mh, meta=meta, base_weight=bw + 0.002),

|

||||

BoundingBox(erfa.x - ns.dotsize_erfa, erfa.y + dist, width=mw, height=mh, meta=meta, base_weight=bw + 0.003),

|

||||

BoundingBox(erfa.x + ns.dotsize_erfa - mw, erfa.y - dist - mh, width=mw, height=mh, meta=meta, base_weight=bw + 0.002),

|

||||

BoundingBox(erfa.x + ns.dotsize_erfa - mw, erfa.y + dist, width=mw, height=mh, meta=meta, base_weight=bw + 0.003),

|

||||

])

|

||||

|

||||

# If debugging is enabled, render one rectangle around each label's bounding box, and one rectangle around each label's median box

|

||||

|

|

@ -500,7 +676,7 @@ def optimize_text_layout(ns, erfas, chaostreffs, size, svg):

|

|||

svg.append(dr)

|

||||

|

||||

|

||||

unfinished = {c for c in erfas.keys()}

|

||||

unfinished = {e.city for e in erfas}

|

||||

finished = {}

|

||||

|

||||

# Greedily choose a candidate for each label

|

||||

|

|

@ -527,7 +703,7 @@ def optimize_text_layout(ns, erfas, chaostreffs, size, svg):

|

|||

|

||||

# If no candidate with at least one optimal solution is left, go by global minimum

|

||||

minbox = min(unfinished_boxes, key=lambda box: box.weight)

|

||||

mincity = minbox.meta['city']

|

||||

mincity = minbox.meta['erfa'].city

|

||||

finished[mincity] = minbox

|

||||

minbox.finished = True

|

||||

unfinished.discard(mincity)

|

||||

|

|

@ -553,9 +729,7 @@ def optimize_text_layout(ns, erfas, chaostreffs, size, svg):

|

|||

return finished

|

||||

|

||||

|

||||

def create_imagemap(ns, size, parent,

|

||||

erfas, erfa_urls, erfa_names, texts,

|

||||

chaostreffs, chaostreff_urls, chaostreff_names):

|

||||

def create_imagemap(ns, size, parent, erfas, chaostreffs, texts):

|

||||

s = ns.png_scale

|

||||

img = etree.Element('img',

|

||||

src=ns.output_directory.rel(ns.output_directory.png_path),

|

||||

|

|

@ -563,34 +737,24 @@ def create_imagemap(ns, size, parent,

|

|||

width=str(size[0]*s), height=str(size[1]*s))

|

||||

imgmap = etree.Element('map', name='erfamap')

|

||||

|

||||

for city, location in erfas.items():

|

||||

if city not in erfa_urls:

|

||||

for erfa in erfas:

|

||||

if erfa.web is None:

|

||||

continue

|

||||

box = texts[city]

|

||||

area = etree.Element('area',

|

||||

shape='circle',

|

||||

coords=f'{location[0]*s},{location[1]*s},{ns.dotsize_erfa*s}',

|

||||

href=erfa_urls[city])

|

||||

area2 = etree.Element('area',

|

||||

shape='rect',

|

||||

coords=f'{box.left*s},{box.top*s},{box.right*s},{box.bottom*s}',

|

||||

href=erfa_urls[city])

|

||||

if city in erfa_names:

|

||||

area.set('title', erfa_names[city])

|

||||

area2.set('title', erfa_names[city])

|

||||

coords=f'{erfa.x*s},{erfa.y*s},{erfa.radius*s}',

|

||||

href=erfa.web,

|

||||

title=erfa.display_name)

|

||||

imgmap.append(area)

|

||||

imgmap.append(area2)

|

||||

|

||||

for city, location in chaostreffs.items():

|

||||

if city not in chaostreff_urls:

|

||||

continue

|

||||

area = etree.Element('area',

|

||||

shape='circle',

|

||||

coords=f'{location[0]*s},{location[1]*s},{ns.dotsize_treff*s}',

|

||||

href=chaostreff_urls[city])

|

||||

if city in chaostreff_names:

|

||||

area.set('title', chaostreff_names[city])

|

||||

imgmap.append(area)

|

||||

if erfa.city in texts:

|

||||

box = texts[erfa.city]

|

||||

area2 = etree.Element('area',

|

||||

shape='rect',

|

||||

coords=f'{box.left*s},{box.top*s},{box.right*s},{box.bottom*s}',

|

||||

href=erfa.web,

|

||||

title=erfa.display_name)

|

||||

imgmap.append(area2)

|

||||

|

||||

parent.append(img)

|

||||

parent.append(imgmap)

|

||||

|

|

@ -600,110 +764,26 @@ def create_svg(ns, bbox):

|

|||

print('Creating SVG image')

|

||||

|

||||

# Convert from WGS84 lon, lat to chosen projection

|

||||

transformer = pyproj.Transformer.from_crs('epsg:4326', ns.projection)

|

||||

scalex = ns.scale_x

|

||||

scaley = ns.scale_y

|

||||

blt = transformer.transform(*bbox[0])

|

||||

trt = transformer.transform(*bbox[1])

|

||||

trans_bounding_box = [

|

||||

(scalex*blt[0], scaley*trt[1]),

|

||||

(scalex*trt[0], scaley*blt[1])

|

||||

]

|

||||

origin = trans_bounding_box[0]

|

||||

svg_box = (trans_bounding_box[1][0] - origin[0], origin[1] - trans_bounding_box[1][1])

|

||||

|

||||

# Load state border lines from cached JSON files

|

||||

shapes_states = []

|

||||

files = os.listdir(ns.cache_directory.shapes_states)

|

||||

for f in files:

|

||||

if not f.endswith('.json'):

|

||||

continue

|

||||

path = os.path.join(ns.cache_directory.shapes_states, f)

|

||||

with open(path, 'r') as sf:

|

||||

shapedata = sf.read()

|

||||

shape = json.loads(shapedata)

|

||||

name = shape['description']['en']

|

||||

geo = shape['data']['features'][0]['geometry']

|

||||

if geo['type'] == 'Polygon':

|

||||

geo['coordinates'] = [geo['coordinates']]

|

||||

for poly in geo['coordinates']:

|

||||

ts = []

|

||||

for x, y in poly[0]:

|

||||

xt, yt = transformer.transform(x, y)

|

||||

ts.append((xt*scalex - origin[0], origin[1] - yt*scaley))

|

||||

shapes_states.append((name, ts))

|

||||

|

||||

# Load country border lines from cached JSON files

|

||||

shapes_countries = []

|

||||

files = os.listdir(ns.cache_directory.shapes_filtered)

|

||||

for f in files:

|

||||

if not f.endswith('.json'):

|

||||

continue

|

||||

path = os.path.join(ns.cache_directory.shapes_filtered, f)

|

||||

with open(path, 'r') as sf:

|

||||

shapedata = sf.read()

|

||||

shape = json.loads(shapedata)

|

||||

name = shape['description']['en']

|

||||

geo = shape['data']['features'][0]['geometry']

|

||||

if geo['type'] == 'Polygon':

|

||||

geo['coordinates'] = [geo['coordinates']]

|

||||

for poly in geo['coordinates']:

|

||||

ts = []

|

||||

for x, y in poly[0]:

|

||||

xt, yt = transformer.transform(x, y)

|

||||

ts.append((xt*scalex - origin[0], origin[1] - yt*scaley))

|

||||

shapes_countries.append((name, ts))

|

||||

|

||||

# Load Erfa infos from cached JSON files

|

||||

erfas = {}

|

||||

erfa_urls = {}

|

||||

erfa_names = {}

|

||||

with open(ns.cache_directory.erfa_info, 'r') as f:

|

||||

ctdata = json.load(f)

|

||||

for city, data in ctdata.items():

|

||||

location = data.get('location')

|

||||

if location is None:

|

||||

continue

|

||||

xt, yt = transformer.transform(*location)

|

||||

erfas[city] = (xt*scalex - origin[0], origin[1] - yt*scaley)

|

||||

web = data.get('web')

|

||||

if web is not None:

|

||||

erfa_urls[city] = web

|

||||

name = data.get('name')

|

||||

if name is not None:

|

||||

erfa_names[city] = name

|

||||

|

||||

# Load Chaostreff infos from cached JSON files

|

||||

chaostreffs = {}

|

||||

chaostreff_urls = {}

|

||||

chaostreff_names = {}

|

||||

with open(ns.cache_directory.chaostreff_info, 'r') as f:

|

||||

ctdata = json.load(f)

|

||||

for city, data in ctdata.items():

|

||||

location = data.get('location')

|

||||

if location is None:

|

||||

continue

|

||||

if city in erfas:

|

||||

# There is an edge case: when a space changes status between Erfa and Chaostreff, the

|

||||

# Semantic MediaWiki engine returns this space as both an Erfa and a Chaostreff, resulting

|

||||

# in glitches in the rendering. As a workaround, here we simply assume that it's an Erfa.

|

||||

continue

|

||||

xt, yt = transformer.transform(*location)

|

||||

chaostreffs[city] = (xt*scalex - origin[0], origin[1] - yt*scaley)

|

||||

web = data.get('web')

|

||||

if web is not None:

|

||||

chaostreff_urls[city] = web

|

||||

name = data.get('name')

|

||||

if name is not None:

|

||||

chaostreff_names[city] = name

|

||||

|

||||

svg_box = ns.projection.setup(ns.scale_x, ns.scale_y, bbox=bbox)

|

||||

rectbox = [0, 0, svg_box[0], svg_box[1]]

|

||||

for name, shape in shapes_states + shapes_countries:

|

||||

for lon, lat in shape:

|

||||

rectbox[0] = min(lon, rectbox[0])

|

||||

rectbox[1] = min(lat, rectbox[1])

|

||||

rectbox[2] = max(lon, rectbox[2])

|

||||

rectbox[3] = max(lat, rectbox[3])

|

||||

|

||||

# Load everything from cached JSON files

|

||||

countries = Country.from_cache(ns, ns.cache_directory.shapes_filtered)

|

||||

states = FederalState.from_cache(ns, ns.cache_directory.shapes_states)

|

||||

erfas = Erfa.from_cache(ns, ns.cache_directory.erfa_info, ns.dotsize_erfa)

|

||||

chaostreffs = Chaostreff.from_cache(ns, ns.cache_directory.chaostreff_info, ns.dotsize_treff)

|

||||

# There is an edge case: when a space changes status between Erfa and Chaostreff, the

|

||||

# Semantic MediaWiki engine returns this space as both an Erfa and a Chaostreff, resulting

|

||||

# in glitches in the rendering. As a workaround, here we simply assume that it's an Erfa.

|

||||

chaostreffs = [c for c in chaostreffs if c not in erfas]

|

||||

|

||||

for c in states + countries:

|

||||

for poly in c.polygons:

|

||||

for x, y in poly:

|

||||

rectbox[0] = min(x, rectbox[0])

|

||||

rectbox[1] = min(y, rectbox[1])

|

||||

rectbox[2] = max(x, rectbox[2])

|

||||

rectbox[3] = max(y, rectbox[3])

|

||||

|

||||

print('Copying stylesheet and font')

|

||||

dst = os.path.join(ns.output_directory.path, ns.stylesheet)

|

||||

|

|
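The filter `[c for c in chaostreffs if c not in erfas]` only removes duplicates if objects of the two types compare equal, which this hunk does not show. The sketch below makes the comparison explicit by matching on the city name. `Space` and `drop_duplicate_treffs` are illustrative names, and matching by `city` is an assumption about how the classes are meant to compare.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Space:
    # Hypothetical minimal record; the real classes carry more fields.
    city: str
    kind: str


def drop_duplicate_treffs(erfas, chaostreffs):
    """Drop every Chaostreff whose city is already listed as an Erfa."""
    erfa_cities = {e.city for e in erfas}
    return [c for c in chaostreffs if c.city not in erfa_cities]


erfas = [Space('Berlin', 'erfa')]
chaostreffs = [Space('Berlin', 'chaostreff'), Space('Aachen', 'chaostreff')]
remaining = drop_duplicate_treffs(erfas, chaostreffs)
```

Building the set of Erfa cities once also avoids a linear scan of `erfas` for each Chaostreff.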

@ -731,65 +811,19 @@ def create_svg(ns, bbox):

|

|||

bg.set('class', 'background')

|

||||

svg.append(bg)

|

||||

|

||||

# Render country borders

|

||||

for name, shape in shapes_countries:

|

||||

points = ' '.join([f'{lon},{lat}' for lon, lat in shape])

|

||||

poly = etree.Element('polygon', points=points)

|

||||

poly.set('class', 'country')

|

||||

poly.set('data-country', name)

|

||||

svg.append(poly)

|

||||

|

||||

# Render state borders

|

||||

# Render shortest shapes last s.t. Berlin, Hamburg and Bremen are rendered on top of their surrounding states

|

||||

for name, shape in sorted(shapes_states, key=lambda x: -sum(len(s) for s in x[1])):

|

||||

points = ' '.join([f'{lon},{lat}' for lon, lat in shape])

|

||||

poly = etree.Element('polygon', points=points)

|

||||

poly.set('class', 'state')

|

||||

poly.set('data-state', name)

|

||||

svg.append(poly)

|

||||

|

||||

# This can take some time, especially if lots of candidates are generated

|

||||

print('Layouting labels')

|

||||

texts = optimize_text_layout(ns, erfas, chaostreffs, (int(svg_box[0]), int(svg_box[1])), svg)

|

||||

texts = optimize_text_layout(ns, erfas, chaostreffs, width=svg_box[0], svg=svg)

|

||||

|

||||

# Render shortest shapes last s.t. Berlin, Hamburg and Bremen are rendered on top of their surrounding states

|

||||

states = sorted(states, key=lambda x: -len(x))

|

||||

# Render country and state borders

|

||||

for c in countries + states:

|

||||

c.render(svg, texts)

|

||||

|

||||

# Render Erfa dots and their labels

|

||||

for city, location in erfas.items():

|

||||

box = texts[city]

|

||||

if city in erfa_urls:

|

||||

group = etree.Element('a', href=erfa_urls[city], target='_blank')

|

||||

else:

|

||||

group = etree.Element('g')

|

||||

group.set('data-erfa', city)

|

||||

circle = etree.Element('circle', cx=str(location[0]), cy=str(location[1]), r=str(ns.dotsize_erfa))

|

||||

circle.set('class', 'erfa')

|

||||

circle.set('data-erfa', city)

|

||||

if city in erfa_names:

|

||||

title = etree.Element('title')

|

||||

title.text = erfa_names[city]

|

||||

circle.append(title)

|

||||

group.append(circle)

|

||||

text = etree.Element('text', x=str(box.left), y=str(box.top + box.meta['baseline']))

|

||||

text.set('class', 'erfalabel')

|

||||

text.set('data-erfa', city)

|

||||

text.text = box.meta['text']

|

||||

group.append(text)

|

||||

svg.append(group)

|

||||

|

||||

# Render Chaostreff dots

|

||||

for city, location in chaostreffs.items():

|

||||

circle = etree.Element('circle', cx=str(location[0]), cy=str(location[1]), r=str(ns.dotsize_treff))

|

||||

circle.set('class', 'chaostreff')

|

||||

circle.set('data-chaostreff', city)

|

||||

if city in chaostreff_names:

|

||||

title = etree.Element('title')

|

||||

title.text = chaostreff_names[city]

|

||||

circle.append(title)

|

||||

if city in chaostreff_urls:

|

||||

a = etree.Element('a', href=chaostreff_urls[city], target='_blank')

|

||||

a.append(circle)

|

||||

svg.append(a)

|

||||

else:

|

||||

svg.append(circle)

|

||||

for erfa in erfas + chaostreffs:

|

||||

erfa.render(svg, texts)

|

||||

|

||||

# Generate SVG, PNG and HTML output files

|

||||

|

||||

|

|
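The new ordering `sorted(states, key=lambda x: -len(x))` only works if the state objects define `__len__`. A sketch of one way that could look, assuming the length is the total number of polygon points; the `Region` class is hypothetical and the actual definition in the commit may differ.

```python
class Region:
    """Hypothetical shape holder; polygons is a list of point lists."""

    def __init__(self, name, polygons):
        self.name = name
        self.polygons = polygons

    def __len__(self):
        # Total number of points across all polygons of this region.
        return sum(len(poly) for poly in self.polygons)


regions = [
    Region('Berlin', [[(0, 0), (1, 0), (1, 1)]]),
    Region('Brandenburg', [[(0, 0), (4, 0), (4, 4), (0, 4), (2, 5)]]),
]
# Largest outlines first, so small enclosed regions are drawn last (on top).
render_order = [r.name for r in sorted(regions, key=lambda r: -len(r))]
```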

@ -812,9 +846,7 @@ def create_svg(ns, bbox):

|

|||

obj = etree.Element('object',

|

||||

data=ns.output_directory.rel(ns.output_directory.svg_path),

|

||||

width=str(svg_box[0]), height=str(svg_box[1]))

|

||||

create_imagemap(ns, svg_box, obj,

|

||||

erfas, erfa_urls, erfa_names, texts,

|

||||

chaostreffs, chaostreff_urls, chaostreff_names)

|

||||

create_imagemap(ns, svg_box, obj, erfas, chaostreffs, texts)

|

||||

body.append(obj)

|

||||

html.append(body)

|

||||

with open(ns.output_directory.html_path, 'wb') as f:

|

||||

|

|

@ -830,9 +862,7 @@ def create_svg(ns, bbox):

|

|||

body = etree.Element('body')

|

||||

html.append(body)

|

||||

|

||||

create_imagemap(ns, svg_box, body,

|

||||

erfas, erfa_urls, erfa_names, texts,

|

||||

chaostreffs, chaostreff_urls, chaostreff_names)

|

||||

create_imagemap(ns, svg_box, body, erfas, chaostreffs, texts)

|

||||

|

||||

with open(ns.output_directory.imagemap_path, 'wb') as f:

|

||||

f.write(b'<!DOCTYPE html>\n')

|

||||

|

|

@ -856,7 +886,7 @@ def main():

|

|||

ap.add_argument('--dotsize-erfa', type=float, default=13, help='Radius of Erfa dots')

|

||||

ap.add_argument('--dotsize-treff', type=float, default=8, help='Radius of Chaostreff dots')

|

||||

ap.add_argument('--rename', type=str, action='append', nargs=2, metavar=('FROM', 'TO'), default=[], help='Rename a city with an overly long name (e.g. "Rothenburg ob der Tauber" to "Rothenburg")')

|

||||

ap.add_argument('--projection', type=str, default='epsg:4258', help='Map projection to convert the WGS84 coordinates to')

|

||||

ap.add_argument('--projection', type=CoordinateTransform, default='epsg:4258', help='Map projection to convert the WGS84 coordinates to')

|

||||

ap.add_argument('--scale-x', type=float, default=130, help='X axis scale to apply after projecting')

|

||||

ap.add_argument('--scale-y', type=float, default=200, help='Y axis scale to apply after projecting')

|

||||

ap.add_argument('--png-scale', type=float, default=1.0, help='Scale of the PNG image')

|

||||

|

|
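Switching `--projection` to `type=CoordinateTransform` relies on `argparse` passing string defaults through the `type` callable, so `ns.projection` is an object even when the flag is omitted. A small sketch of that behaviour, using a hypothetical `FakeTransform` class in place of `CoordinateTransform` so that it runs without `pyproj`:

```python
import argparse


class FakeTransform:
    # Hypothetical stand-in: only records the projection name.
    def __init__(self, projection: str):
        self.projection = projection


ap = argparse.ArgumentParser()
ap.add_argument('--projection', type=FakeTransform, default='epsg:4258',
                help='Map projection to convert the WGS84 coordinates to')

# String defaults are converted by the type callable, just like CLI values.
default_ns = ap.parse_args([])
custom_ns = ap.parse_args(['--projection', 'epsg:3857'])
```

In both cases the namespace holds a `FakeTransform` instance, which is what allows calls such as `ns.projection.setup(...)` in `create_svg`.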

@ -867,26 +897,31 @@ def main():

|

|||

if ns.update_borders or not os.path.isdir(ns.cache_directory.shapes_countries):

|

||||

if os.path.isdir(ns.cache_directory.shapes_countries):

|

||||

shutil.rmtree(ns.cache_directory.shapes_countries)

|

||||

fetch_wikidata_countries(target=ns.cache_directory.shapes_countries)

|

||||

print('Retrieving country border shapes')

|

||||

Country.fetch(target=ns.cache_directory.shapes_countries)

|

||||

|

||||

if ns.update_borders or not os.path.isdir(ns.cache_directory.shapes_states):

|

||||

if os.path.isdir(ns.cache_directory.shapes_states):

|

||||

shutil.rmtree(ns.cache_directory.shapes_states)

|

||||

fetch_wikidata_states(target=ns.cache_directory.shapes_states)

|

||||

print('Retrieving state border shapes')

|

||||

FederalState.fetch(target=ns.cache_directory.shapes_states)

|

||||

|

||||

if ns.update_erfalist or not os.path.isfile(ns.cache_directory.erfa_info):

|

||||

if os.path.exists(ns.cache_directory.erfa_info):

|

||||

os.unlink(ns.cache_directory.erfa_info)

|

||||

fetch_erfas(target=ns.cache_directory.erfa_info, url=ERFA_URL)

|

||||

print('Retrieving Erfas information')

|

||||

Erfa.fetch(ns, target=ns.cache_directory.erfa_info, radius=ns.dotsize_erfa)

|

||||

|

||||

if ns.update_erfalist or not os.path.isfile(ns.cache_directory.chaostreff_info):

|

||||

if os.path.exists(ns.cache_directory.chaostreff_info):

|

||||

os.unlink(ns.cache_directory.chaostreff_info)

|

||||

fetch_erfas(target=ns.cache_directory.chaostreff_info, url=CHAOSTREFF_URL)

|

||||

print('Retrieving Chaostreffs information')

|

||||

Chaostreff.fetch(target=ns.cache_directory.chaostreff_info, radius=ns.dotsize_treff)

|

||||

|

||||

bbox = compute_bbox(ns)

|

||||

|

||||

filter_boundingbox(ns.cache_directory.shapes_countries, ns.cache_directory.shapes_filtered, bbox)

|

||||

print('Filtering countries outside the bounding box')

|

||||

Country.filter_boundingbox(ns, ns.cache_directory.shapes_countries, ns.cache_directory.shapes_filtered, bbox)

|

||||

|

||||

create_svg(ns, bbox)

|

||||

|

||||

|

|

|

|||

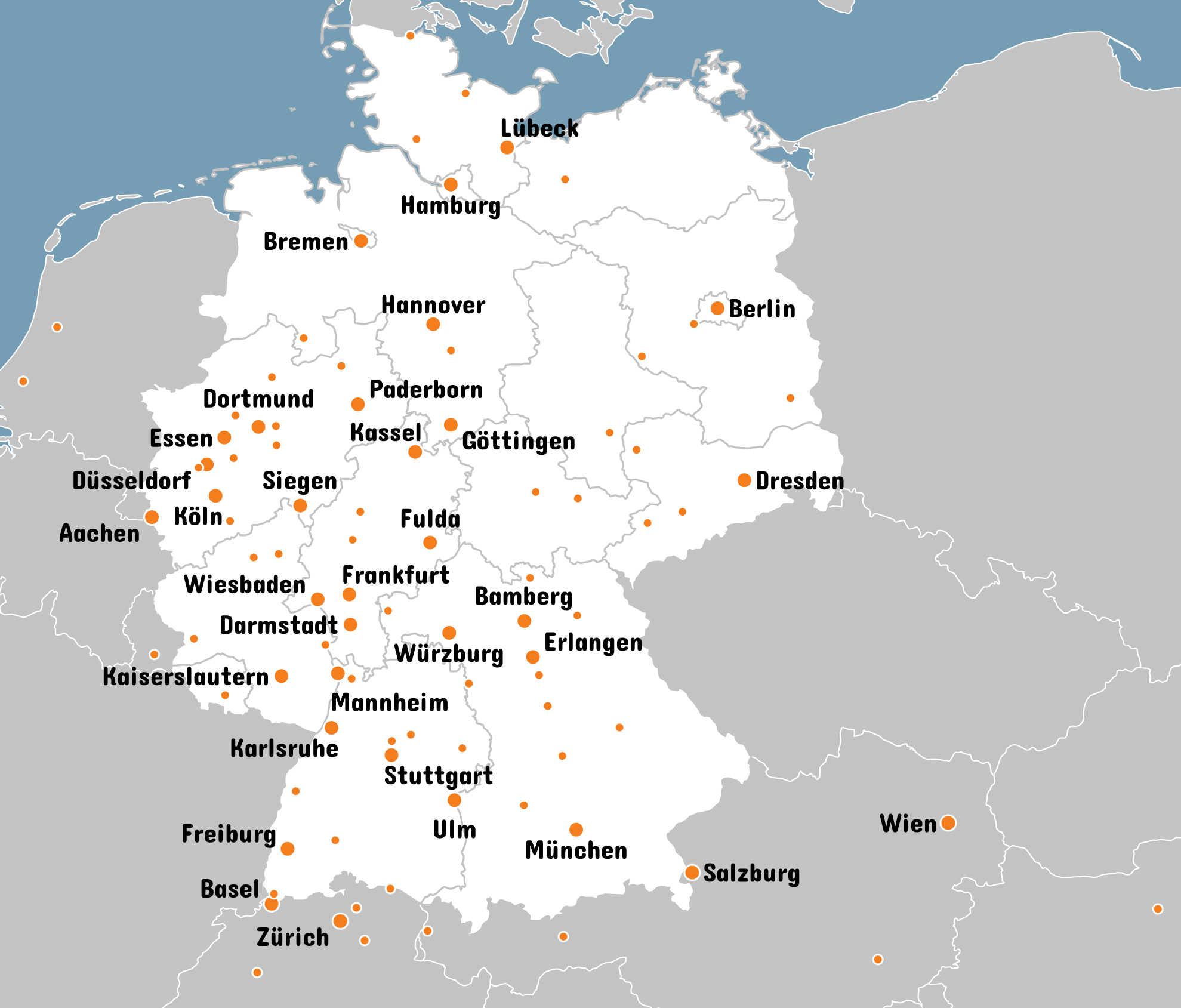

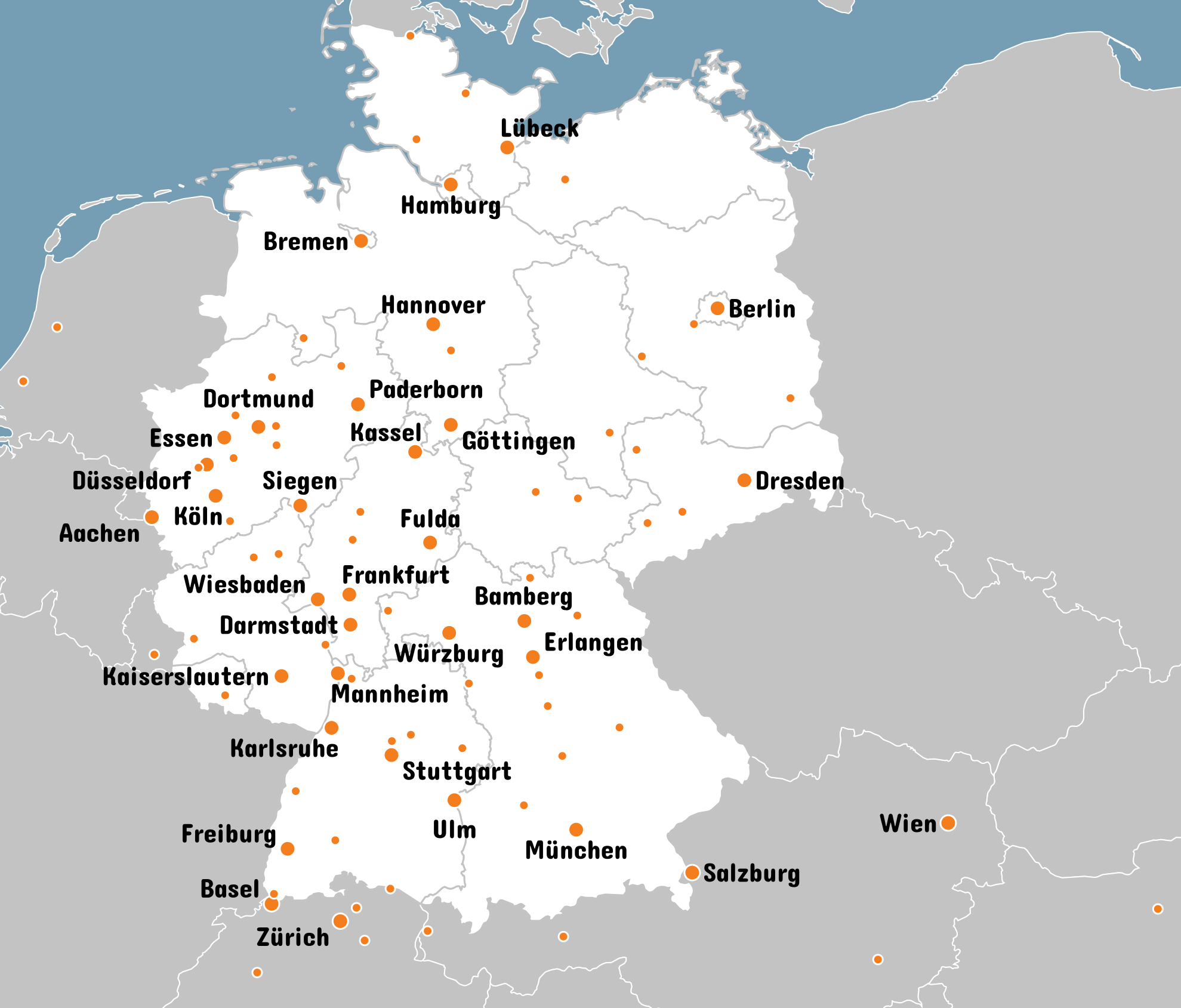

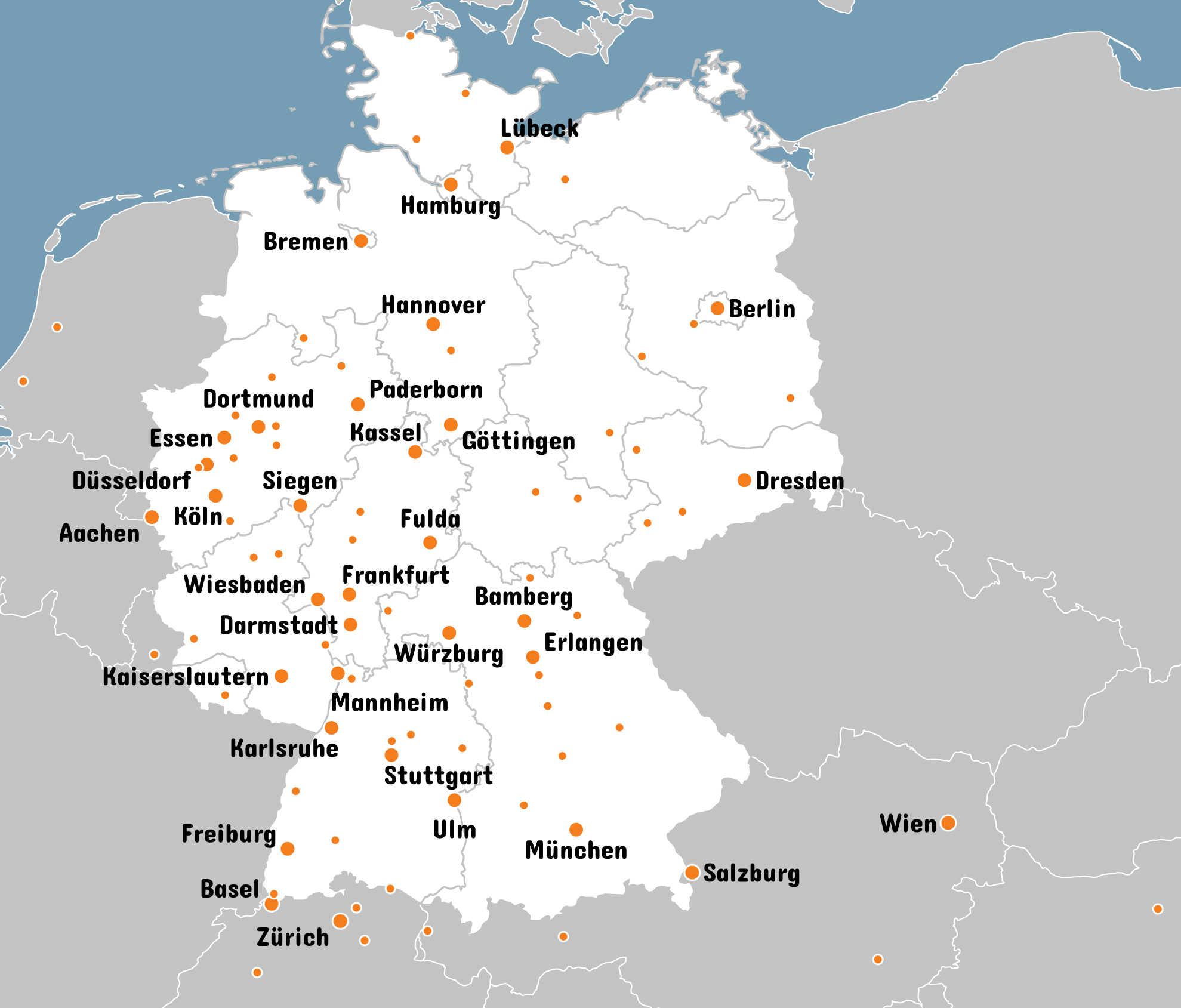

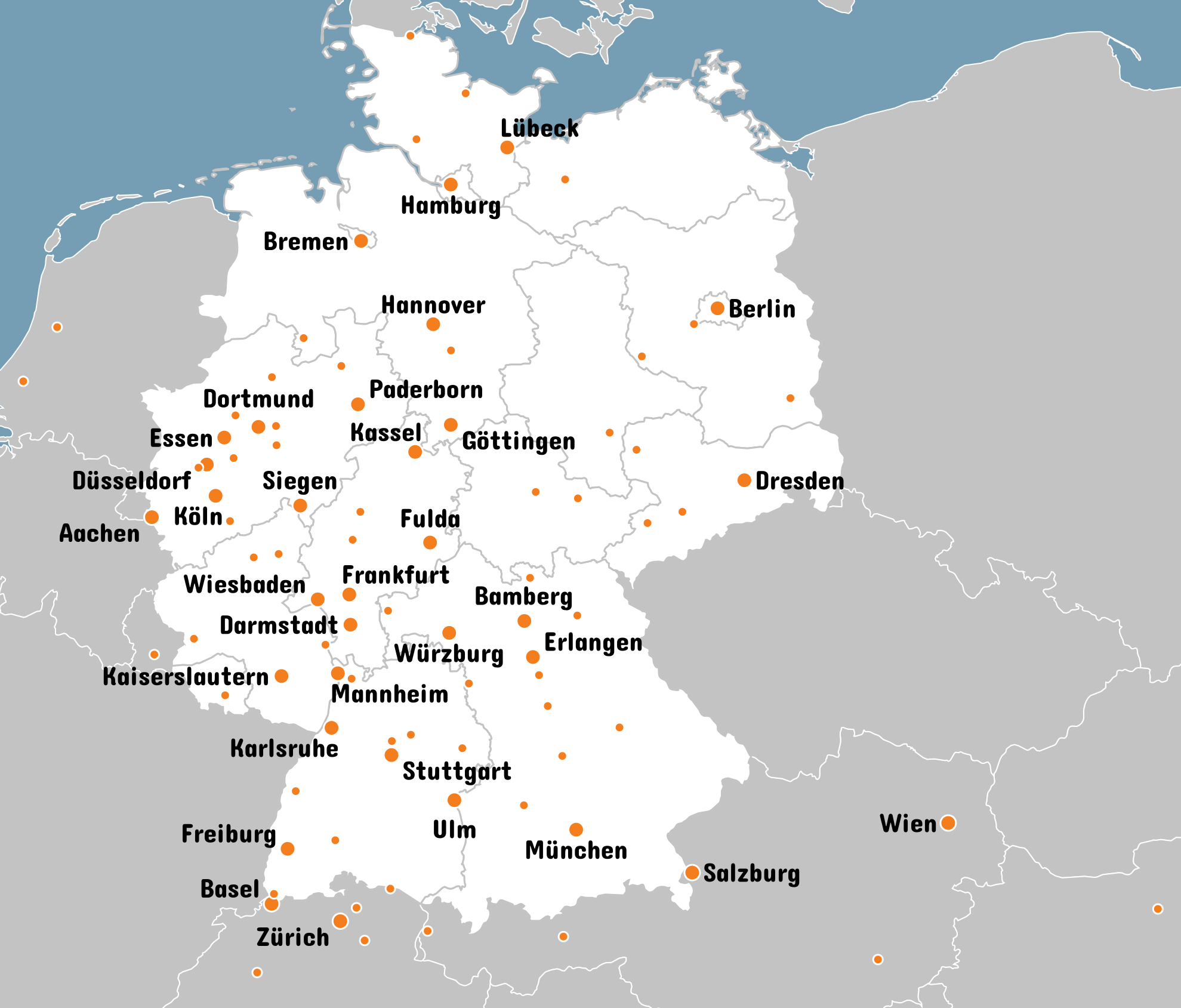

BIN

map.readme.png


Binary file not shown.

Before: 428 KiB. After: 428 KiB.
